Anyone who has used a chatbot like ChatGPT has noticed this: after a while, the AI suddenly seems to "forget" what was said earlier. This can feel frustrating – but in fact, it is a direct consequence of how Large Language Models (LLMs) are designed.

Every LLM operates within a context window – essentially its short-term memory. This window defines how much text (measured in tokens) the model can take into account at once: the instructions, the conversation so far, and the reply it is generating.

Older models like GPT-3 handled around 4,000 tokens. Current versions of GPT-4 process 32,000–128,000 tokens, and Anthropic's Claude handles up to 200,000, enough to cover a long novel. Still, once this limit is reached, older parts of the conversation are cut off (Casciato, 2025; Van Droogenbroeck, 2025).

To put this in perspective, a token roughly corresponds to three-quarters of a word in English. This means that a 4,000-token window could handle approximately 3,000 words – roughly the length of a short academic paper. Modern models with 200,000-token windows can process the equivalent of a full-length novel, yet even this substantial capacity has its limits in extended conversations.
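The conversion above is simple enough to check in a few lines. The sketch below is only a rule-of-thumb calculation: the constant `WORDS_PER_TOKEN` and the helper `approx_words` are illustrative names, and the real ratio depends on the tokenizer and the language of the text.

```python
# Rule of thumb from the text: one token is roughly 0.75 English words.
# This is an approximation; actual ratios vary by tokenizer and language.
WORDS_PER_TOKEN = 0.75


def approx_words(tokens: int) -> int:
    """Estimate how many English words fit into a given number of tokens."""
    return round(tokens * WORDS_PER_TOKEN)


for window in (4_000, 32_000, 128_000, 200_000):
    print(f"{window:>7,} tokens is roughly {approx_words(window):>7,} words")
```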

When an AI "forgets," it's not experiencing memory loss in the human sense. Instead, the older parts of the conversation are literally removed from the input that gets processed. The model doesn't have access to information that falls outside its context window – it's as if that conversation never happened from the AI's perspective.

This process typically works on a "sliding window" basis. As new messages are added to a conversation, the oldest messages are systematically removed to make room. Some systems employ more sophisticated strategies, such as preserving the initial system instructions and recent exchanges while removing middle portions, but the fundamental constraint remains.
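A minimal sketch of such a sliding window might look like the following. It is not how any particular chatbot is implemented: `trim_history` and `rough_token_count` are hypothetical names, the message format is assumed, and counting whitespace-separated words is a crude stand-in for a real tokenizer.

```python
from typing import Callable

Message = dict[str, str]  # assumed shape: {"role": "user", "content": "..."}


def rough_token_count(text: str) -> int:
    """Crude stand-in for a tokenizer: counts whitespace-separated words."""
    return len(text.split())


def trim_history(
    messages: list[Message],
    max_tokens: int,
    count_tokens: Callable[[str], int] = rough_token_count,
) -> list[Message]:
    """Keep system messages plus the newest messages that fit the budget."""
    system = [m for m in messages if m["role"] == "system"]
    rest = [m for m in messages if m["role"] != "system"]

    # System instructions are always preserved, so they reduce the budget first.
    budget = max_tokens - sum(count_tokens(m["content"]) for m in system)

    kept: list[Message] = []
    # Walk backwards from the newest message; stop at the first one that no longer fits.
    for message in reversed(rest):
        cost = count_tokens(message["content"])
        if cost > budget:
            break
        kept.append(message)
        budget -= cost

    # Restore chronological order, with system messages at the front.
    return system + list(reversed(kept))


history = [
    {"role": "system", "content": "be brief"},
    {"role": "user", "content": "one two three"},
    {"role": "assistant", "content": "four five"},
    {"role": "user", "content": "six seven eight nine"},
]
for message in trim_history(history, max_tokens=8):
    print(message["role"], "->", message["content"])
```

Everything that does not make it into the returned list is simply never sent to the model, which is why it appears "forgotten".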

The limitation is not a flaw but a conscious trade-off. In a standard transformer, the cost of the self-attention step grows quadratically with input length, because every token is compared with every other token. Without a cap, responses would become prohibitively slow and expensive.

Moreover, research like "Lost in the Middle" (Liu et al., 2023) shows that models do not handle long sequences uniformly – they focus more on the beginning and end of the input, while information in the middle tends to be overlooked.

Quadratic scaling means that doubling the context length roughly quadruples the computational requirements of attention. For a company serving millions of users simultaneously, this represents enormous infrastructure costs and energy consumption. Even with the most advanced hardware, there are practical limits to how much context can be processed in real time while maintaining reasonable response speeds.
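The "double the length, quadruple the work" relationship can be illustrated by counting token pairs. The sketch below only counts the pairwise scores of plain full self-attention; it ignores constant factors, the parts of a model whose cost grows linearly, and any efficiency optimizations, so the numbers show the trend rather than real costs. The function name `attention_pairs` is illustrative.

```python
def attention_pairs(context_length: int) -> int:
    """Number of token-to-token scores in full self-attention over n tokens."""
    return context_length * context_length


baseline = attention_pairs(4_000)
for n in (4_000, 8_000, 16_000, 32_000):
    ratio = attention_pairs(n) / baseline
    print(f"{n:>6,} tokens: {attention_pairs(n):>13,} pairs ({ratio:.0f}x the 4,000-token cost)")
```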

The attention mechanism that powers these models also faces inherent challenges with very long sequences. As the context grows, the number of token pairs the model must relate grows quadratically, which can lead to what is sometimes called "attention dilution": important connections get lost in the noise of processing vast amounts of information.

These constraints manifest in various ways that users encounter daily. In customer service applications, an AI might lose track of a complex technical issue that spans multiple exchanges. In educational contexts, tutoring AIs may forget earlier explanations when helping students work through multi-step problems. Creative writing assistants might lose narrative consistency in longer stories, requiring users to repeatedly remind the AI about character details and plot points.

Professional applications feel these limitations acutely. Legal document analysis, medical consultation systems, and business strategy discussions often require maintaining context across extensive dialogues. The current memory constraints mean that such applications often require careful conversation management or external documentation to maintain continuity.

Researchers and developers are experimenting with multiple strategies.

Some companies are implementing clever workarounds. Vector databases can store conversation embeddings that capture semantic meaning, allowing systems to retrieve relevant past discussions without storing entire transcripts. Hierarchical summarization creates nested summaries at different levels of detail, preserving both broad context and specific details as needed.
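The retrieval idea can be sketched in plain Python. This is a toy illustration under loud assumptions: `embed` is a bag-of-words count vector standing in for a real embedding model, `ConversationMemory` is a hypothetical in-memory class standing in for a vector database, and ranking uses cosine similarity. A production system would use learned embeddings and a dedicated index.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[word] * b[word] for word in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class ConversationMemory:
    """Hypothetical minimal store: keeps past messages with their vectors."""

    def __init__(self) -> None:
        self._items: list[tuple[str, Counter]] = []

    def add(self, text: str) -> None:
        self._items.append((text, embed(text)))

    def search(self, query: str, top_k: int = 2) -> list[str]:
        """Return the stored messages most similar to the query."""
        q = embed(query)
        ranked = sorted(self._items, key=lambda item: cosine(q, item[1]), reverse=True)
        return [text for text, _ in ranked[:top_k]]


memory = ConversationMemory()
memory.add("my router keeps dropping the wifi connection")
memory.add("i prefer vegetarian recipes")
memory.add("the router model is an ax3000")

# Only the retrieved snippets would be added back into the model's context.
print(memory.search("router wifi connection", top_k=2))
```

The design point is that the full transcript stays outside the context window; only the few snippets judged relevant to the current question are re-inserted into the prompt.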

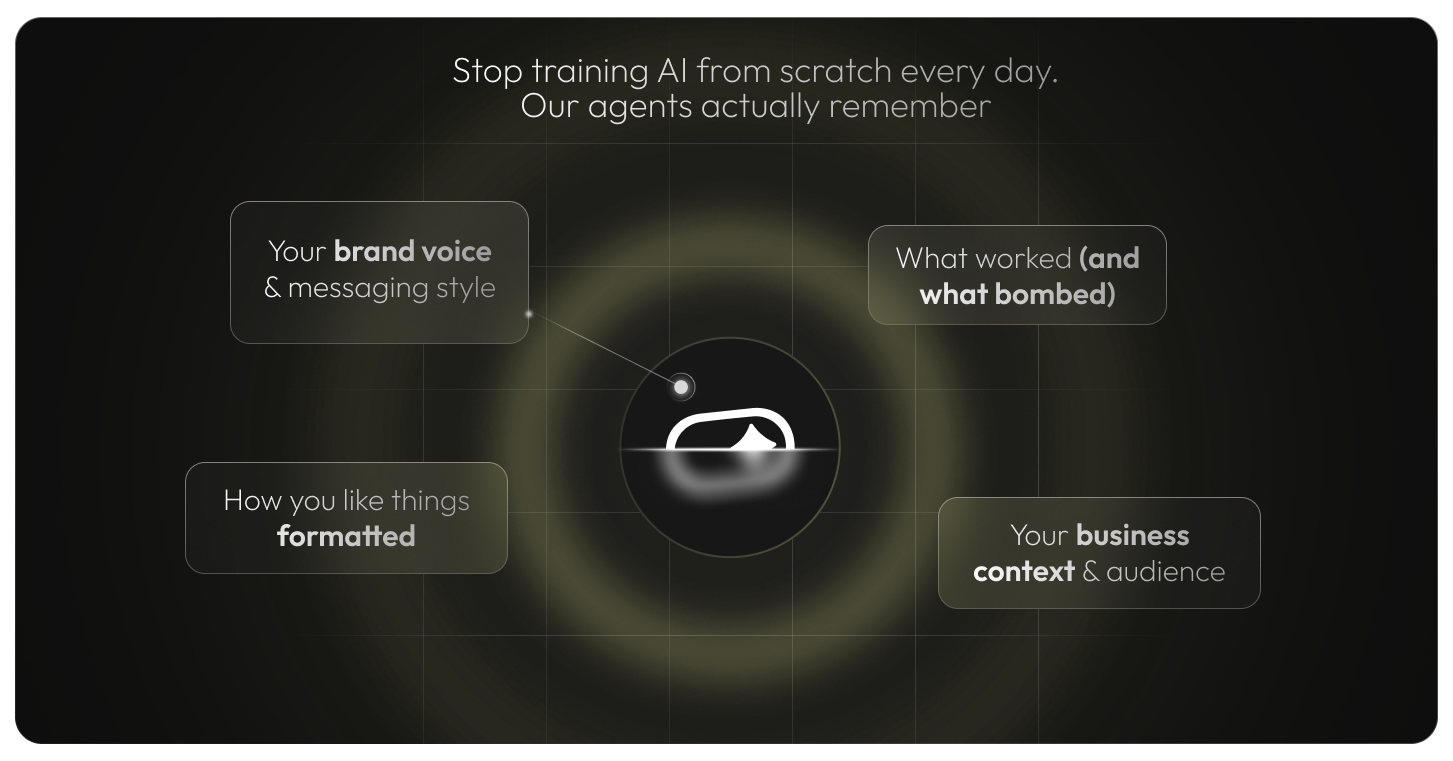

Interestingly, "forgetting" is not always a weakness – sometimes it is a feature. OpenAI (2024) and Google (2025) introduced controllable memory functions, allowing users to decide what is stored, or to opt for temporary "incognito chats" that are deleted automatically. This reflects a delicate balance between technological progress and data privacy.

The privacy implications of AI memory are profound. Persistent memory systems raise questions about data ownership, consent, and the right to be forgotten. Users might share sensitive information assuming it will be forgotten after the session ends, only to discover it has been permanently stored. Conversely, users building long-term relationships with AI assistants may want certain preferences and context to persist.

Different regulatory environments are taking varied approaches to these challenges. European GDPR requirements emphasize user control over personal data, while other jurisdictions focus more on disclosure and consent mechanisms. This regulatory patchwork creates complex challenges for global AI systems that must balance memory capabilities with privacy compliance.

The forgetting behavior of AI systems also creates interesting psychological dynamics. Users often develop expectations based on human conversation patterns, where forgetting is gradual and selective rather than sudden and complete. This mismatch can create frustration and reduce trust in AI systems.

However, some users appreciate the clean slate that comes with AI forgetting. Sensitive conversations, personal struggles, or embarrassing moments don't carry forward indefinitely. This creates a unique space where users can experiment with ideas or seek help without fear of long-term judgment or recall.

Major AI developers are pursuing different strategies to address memory limitations. Some focus on expanding context windows through more efficient architectures. Others emphasize external memory systems that can be selectively accessed. Still others are exploring biological metaphors, implementing memory systems that mirror human forgetting curves and prioritization mechanisms.
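To make the forgetting-curve idea concrete, here is one way such a mechanism could be sketched. It assumes a simple exponential decay, a common simplification of the human forgetting curve, combined with a hand-assigned importance score. The function names, the threshold, and the 48-hour stability value are all illustrative assumptions, not a description of any vendor's system.

```python
import math


def retention(hours_since_use: float, stability_hours: float) -> float:
    """Exponential forgetting curve: 1.0 right after use, decaying towards 0."""
    return math.exp(-hours_since_use / stability_hours)


def memories_to_keep(
    memories: list[tuple[str, float, float]],
    threshold: float = 0.3,
    stability_hours: float = 48.0,
) -> list[str]:
    """Return texts whose importance-weighted retention is still above the threshold.

    Each memory is a tuple of (text, importance between 0 and 1, hours since last use).
    """
    kept = []
    for text, importance, hours_since_use in memories:
        score = importance * retention(hours_since_use, stability_hours)
        if score >= threshold:
            kept.append(text)
    return kept


memories = [
    ("prefers metric units", 1.0, 24.0),
    ("asked about the weather", 0.2, 1.0),
    ("mentioned a delayed train", 0.5, 200.0),
]
print(memories_to_keep(memories))
```

In this sketch a memory survives either by being important or by being used recently; items that are neither gradually fall below the threshold and are dropped.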

The competitive landscape around AI memory is intensifying. Companies that successfully solve the memory problem while maintaining speed, cost-effectiveness, and privacy protection may gain significant advantages in applications requiring long-term user relationships.

The central question is no longer whether AI will have memory, but how. A system that remembers user preferences appears more competent, helpful, and human. At the same time, long-term memory requires careful governance to avoid risks of over-retention.

The future likely holds a spectrum of memory options rather than a one-size-fits-all solution. Different applications will demand different memory architectures – from ephemeral systems for privacy-sensitive tasks to comprehensive memory systems for long-term partnerships. Users may gain fine-grained control over what their AI assistants remember, forget, and prioritize.

We are at a turning point – today's "forgetful" systems are laying the groundwork for tomorrow's consistent, memory-enabled assistants. The challenge lies not just in building systems that can remember, but in building systems that remember wisely, ethically, and in service of human flourishing.

References (selection)